ResNet is one of the most influential deep neural networks; it achieved outstanding results in the ILSVRC 2015 classification challenge. It also generalizes well to other recognition tasks, winning first place in ImageNet detection, ImageNet localization, COCO detection and COCO segmentation in the ILSVRC and COCO 2015 competitions. There are many variants of the ResNet architecture, i.e. the same concept with a different number of layers: ResNet-18, ResNet-34, ResNet-50, ResNet-101, ResNet-110, ResNet-152, ResNet-164, ResNet-1202 and so on. The number after the name simply gives the number of layers in that variant. In this post, we are going to cover ResNet-50 in detail, which is one of the most widely used variants.

Although object classification is a very old problem, people are still working on it to make models more robust. LeNet, introduced in 1998 to solve the digit recognition problem, was one of the first deep convolutional networks. It has 7 layers stacked one over the other to recognize the digits written on bank cheques. LeNet, however, could not be trained on more demanding data such as high-resolution images, and the computing power available in 1998 was very limited.

OLD TRICK NEW TWIST:

The Deep Learning community achieved groundbreaking results in 2012, when AlexNet was introduced to solve the ImageNet classification challenge. AlexNet has a total of 8 layers: 5 convolution layers and 3 fully connected layers. Unlike LeNet, AlexNet uses many more filters in each convolutional layer, and the filter sizes are 11×11, 5×5 and 3×3. The network has around 60 million parameters. Training was done in parallel, i.e. two Nvidia GPUs were used to train the network on the ImageNet dataset. AlexNet achieved 57% top-1 and 80.3% top-5 accuracy. Furthermore, AlexNet used Dropout to protect the model from overfitting. Dropout does not remove any of the roughly 60 million parameters; it randomly switches off a fraction of the neurons at each training step so that the network cannot rely too heavily on any single one.

LET’S GET DEEPER:

Addition of layers to make the network deep.

After AlexNet, the next landmark in the ImageNet challenge (ILSVRC-2014) was VGG-16, which won the localization task and finished second in classification. There are a lot of differences between AlexNet and VGG-16. Firstly, VGG-16 has more convolution layers, which shows that deep learning researchers had started to focus on increasing the depth of the network. Secondly, VGG-16 uses only 3×3 kernels in every convolution layer. Unlike the large kernels of AlexNet, these small kernels can extract fine features present in images. The architecture of VGG-16 has 5 blocks overall. Each of the first two blocks has 2 convolution layers and 1 max-pooling layer. Each of the remaining three blocks has 3 convolution layers and 1 max-pooling layer. Thirdly, three fully connected layers are added after block 5: the first two have 4096 neurons each and the third has 1000 neurons for the ImageNet classification task. Therefore, the deep learning community also refers to VGG-16 as one of the widest networks ever built. Moreover, the fully connected layers account for roughly 124 million of the network's 138 million parameters, with the first one alone contributing about 103 million. The final layer is the Soft-max layer. The top-1 and top-5 accuracy of VGG-16 was 71.3% and 90.1% respectively.
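A rough parameter count shows where the weights of VGG-16 sit. The sketch below assumes the standard VGG-16 configuration (3×3 kernels with biases, a 224×224×3 input, and a 7×7×512 volume entering the first fully connected layer); the helper names are illustrative and not part of any library.

```python
# Rough parameter count for VGG-16, assuming the standard configuration:
# 3x3 convolutions with biases, a 224x224x3 input, and five 2x2 max-pools.

def conv_params(in_ch, out_ch, k=3):
    """Weights plus biases of one k x k convolution layer."""
    return k * k * in_ch * out_ch + out_ch

def dense_params(in_units, out_units):
    """Weights plus biases of one fully connected layer."""
    return in_units * out_units + out_units

# Output channels of the 13 convolution layers, grouped by block.
blocks = [[64, 64], [128, 128], [256, 256, 256], [512, 512, 512], [512, 512, 512]]

conv_total = 0
in_ch = 3
for block in blocks:
    for out_ch in block:
        conv_total += conv_params(in_ch, out_ch)
        in_ch = out_ch

# Five max-pools shrink 224 to 7, so the first dense layer sees 7*7*512 inputs.
fc = [dense_params(7 * 7 * 512, 4096), dense_params(4096, 4096), dense_params(4096, 1000)]
total = conv_total + sum(fc)

print(f"convolution layers: {conv_total:,}")
print(f"fully connected layers: {sum(fc):,}")
print(f"total: {total:,}")
```

Under these assumptions the convolution layers hold under 15 million parameters, while the fully connected layers hold the large majority of the total.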

GOOGLENET: NETWORK IN NETWORK:

After VGG-16, Google introduced GoogLeNet (Inception-V1), the winner of the ILSVRC-2014 classification task, with higher accuracy than its predecessors. Unlike the prior networks, GoogLeNet has a somewhat unusual architecture. Firstly, networks such as VGG-16 stack convolution layers one over the other, whereas GoogLeNet arranges convolution and pooling layers in parallel to extract features using different kernel sizes. The overall intention was to increase the depth of the network and to reach a higher performance level than previous winners of the ImageNet classification challenge. Secondly, the network uses 1×1 convolutions to control the size of the volume passed on for further processing in each inception module. The inception module is a collection of convolution and pooling operations performed in parallel so that features can be extracted at different scales. Thirdly, the network has only a few million parameters (the authors report about 12 times fewer than AlexNet), which makes GoogLeNet a much lighter model than AlexNet and VGG-16. Fourthly, the network uses a Global Average Pooling layer in place of the large fully connected layers. Ultimately, GoogLeNet achieved the lowest top-5 error, 6.67%, in ILSVRC-2014.
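Global average pooling, mentioned above, can be illustrated with NumPy. The 7×7×1024 feature volume and the 1000-class classifier are assumptions chosen for illustration, and biases are ignored in the weight counts.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical final feature volume: height x width x channels.
features = rng.standard_normal((7, 7, 1024))

# Global average pooling keeps one number per channel: the spatial mean.
pooled = features.mean(axis=(0, 1))

# Weights a 1000-way classifier would need on the flattened volume
# versus on the pooled vector (biases ignored).
flatten_weights = features.size * 1000
pooled_weights = pooled.size * 1000

print(pooled.shape)
print(flatten_weights, pooled_weights)
```

The pooled vector has one entry per channel, so the classifier that follows needs 49 times fewer weights than one attached to the flattened volume in this example.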

STILL GRADIENTS

NOW, IT’S TIME TO TALK ABOUT THE MAIN SUBJECT OF THIS POST: THE WINNER OF ILSVRC 2015: THE DEEP RESIDUAL NETWORK

The winner of the ImageNet competition in 2015 was ResNet152, i.e. the Residual Network variant with 152 layers. In this post, we will cover the concept of ResNet50, which can be generalized to any other variant of ResNet. Before explaining the deep residual network, I would like to talk about simple deep networks (networks with a large number of convolution, pooling and activation layers stacked one over the other). Since 2013, the Deep Learning community had been building deeper networks because they achieved higher accuracy. Furthermore, deeper networks can represent more complex features, so model robustness and performance can be increased. However, simply stacking up more layers didn’t work for the researchers. While training deeper networks, the problem of accuracy degradation was observed. In other words, adding more layers to the network made the accuracy either saturate or start to decrease abruptly. The culprit usually blamed for this degradation is the vanishing gradient effect, which becomes pronounced in deeper networks.

Vanishing Gradient Intuition

During the backpropagation stage, the error is calculated and the gradient values are determined. The gradients are sent back through the hidden layers and the weights are updated accordingly. This process of computing the gradient and passing it back to the previous hidden layer continues until the input layer is reached. Because each step multiplies the gradient by further factors that are often smaller than one, the gradient becomes smaller and smaller as it approaches the first layers of the network. Therefore, the weights of the initial layers either update very slowly or remain the same. In other words, the initial layers of the network won’t learn effectively. Hence, training of a deep network may not converge, and the accuracy either starts to degrade or saturates at a particular value. Although the vanishing gradient problem was addressed using normalized initialization of the weights, the accuracy of deeper networks was still not increasing.
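A toy calculation shows how quickly the gradient can shrink. The sketch assumes a chain of scalar sigmoid units with weight 1 and pre-activation 0, where the derivative of the sigmoid reaches its maximum of 0.25. Real networks are far more complicated, so read this as intuition only; the function names are illustrative.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def sigmoid_grad(z):
    s = sigmoid(z)
    return s * (1.0 - s)

def gradient_at_input(depth, weight=1.0, pre_activation=0.0):
    """Backpropagated gradient through `depth` identical sigmoid units.

    By the chain rule, each layer multiplies the gradient by
    weight * sigmoid'(pre_activation).
    """
    grad = 1.0  # gradient of the loss with respect to the final output
    for _ in range(depth):
        grad *= weight * sigmoid_grad(pre_activation)
    return grad

for depth in (1, 5, 10, 20, 50):
    print(depth, gradient_at_input(depth))
```

Even in this best case for the sigmoid, the gradient reaching the first unit shrinks by a factor of four per layer.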

What is Deep Residual Network?

A Deep Residual Network is very similar to networks that have convolution, pooling, activation and fully-connected layers stacked one over the other. The only change needed to turn a plain network into a residual network is the identity connection between the layers. The figure below shows the residual block used in the network. You can see the identity connection as the curved arrow that starts at the input and ends at the output of the residual block.

A Residual Block of Deep Residual Network

What is the intuition behind the residual block?

As we learned earlier, increasing the number of layers in a plain network eventually degrades the accuracy. The deep learning community wanted a deeper architecture that performs better than, or at least as well as, the shallower networks. Now, imagine a deep network with convolution, pooling and other layers stacked one over the other. Let us assume that the actual function we are trying to learn after every layer is given by Ai(x), where Ai is the output function of the i-th layer for the given input x. You can refer to the next figure to understand the context: the output functions after the layers are A1, A2, A3, …. An.

Assumed Deep Convolutional Neural Network

In this way of learning, the network tries to learn these output functions directly, i.e. without any extra support. In practice, the network cannot learn the ideal functions (A1, A2, A3, …. An) exactly; it can only learn functions B1, B2, B3, …. Bn that are close to them. However, our assumed deep network is so poor that it cannot even learn B1, B2, B3, …. Bn, because of the vanishing gradient effect and the unsupported way of training.

The support for the training is provided by adding the identity mapping to the residual output. First, what is the meaning of identity mapping? In a nutshell, applying an identity mapping to the input gives an output that is the same as the input (AI = A, where A is the input matrix and I is the identity mapping).

Traditional networks such as AlexNet, VGG-16, etc try to learn the A1, A2, A3, …. An directly as shown in the diagram of the simple deep network. In the forward pass, the input (image) is passed to the network to get the output. The error is calculated and gradients are determined and backpropagation helps the network to approximate the functions A1, A2, A3, …. An in the form of B1, B2, B3, …. Bn. The creators of ResNet thought that:

“IF THE MULTIPLE NON-LINEAR LAYERS CAN ASYMPTOTICALLY APPROXIMATE COMPLICATED FUNCTIONS, THEN IT IS EQUIVALENT TO HYPOTHESIZE THAT THEY CAN ASYMPTOTICALLY APPROXIMATE THE RESIDUAL FUNCTION”.

After reading the statement, the first question that strikes our mind is as follows:

What is a Residual Function?

The simple answer is that the residual function (also known as the residual mapping) is the difference between the output and the input of the residual block in question. In other words, the residual mapping is the value that is added to the input to approximate the final function (A1, A2, A3, …. An) of the block. You can also think of the residual mapping as the correction that must be added to the input in order to reach the final destination, i.e. to approximate the final function. You can visualize the residual mapping as shown in the next figure, where it acts as a bridge between the input and the output of the block. Note that the weight layers and activation function are not shown in the diagram, but they are present in the network.

Intuitive representation of Residual Function

Let us change our naming conventions to make this post compatible with the ResNet paper. Fig-1 shows the residual block. The function which should be learned as the final result of the block is represented as H(x). The input to the block is x, and the residual mapping is represented as F(x).

Why will the Residual Function work?

The creators of ResNet again reasoned as follows:

“ RATHER THAN EXPECTING STACKED LAYERS TO LEARN TO APPROXIMATE H(x), THE AUTHORS ARE LETTING THE LAYERS TO APPROXIMATE A RESIDUAL FUNCTION i.e. F(x) = H(x) – x.”

The statement above says that, when training a deep residual network, the layers focus on learning the residual function F(x). If the network learns this difference between the desired output and the input, the output of the block is recovered simply by adding the input back: H(x) = F(x) + x. In particular, when the identity mapping is already close to optimal, the layers only have to push the residual towards zero, which is easier than fitting an identity mapping with a stack of non-linear layers. As a result, adding more blocks should not make the feature maps worse than those of a shallower network, because in the worst case a block just passes its input through. A good feature map contains the pertinent features needed to classify the image into its ground-truth class.

Why will identity mapping work? How does the identity connection affect the performance of the network?

During the time of backpropagation, there are two pathways for the gradients to transit back to the input layer while traversing a residual block. In the next diagram, you can see that there are two pathways: pathway-1 is the identity mapping way and pathway-2 is the residual mapping way.

Gradient Pathways in ResNet

We have already discussed the vanishing gradient problem in the simple deep network. Now we will explain how the identity connection addresses it. Firstly, let us see how a residual block is represented mathematically:

y = F(x, {Wi}) + x

where y is the output of the block, x is the input to the residual block and F(x, {Wi}) is the residual mapping to be learned. Note that the residual block contains weight layers, represented as Wi where 1 ≤ i ≤ number of layers in a residual block. For a residual block with 2 weight layers, the term F(x, {Wi}) can be written as follows:

F(x, {Wi}) = W2 𝛔(W1x)

where 𝛔 is the ReLU activation function and the second non-linearity is added after the addition with identity mapping i.e. H(x) = 𝛔( y ).
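The two formulas translate almost line for line into NumPy. This is a minimal sketch that assumes plain dense weight matrices without biases or batch normalization; `residual_block` and the shapes are illustrative choices, not code from the paper.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def residual_block(x, w1, w2):
    """Post-activation residual block: H(x) = relu(F(x) + x)."""
    f = w2 @ relu(w1 @ x)   # residual mapping F(x, {Wi}) = W2 * relu(W1 * x)
    y = f + x               # identity shortcut added to the residual
    return relu(y)          # second non-linearity, applied after the addition

rng = np.random.default_rng(0)
x = rng.standard_normal(4)

# Small random weights give an output close to relu(x).
w1 = 0.01 * rng.standard_normal((4, 4))
w2 = 0.01 * rng.standard_normal((4, 4))
print(residual_block(x, w1, w2))

# With all-zero weights the residual vanishes and the block returns relu(x).
zeros = np.zeros((4, 4))
print(residual_block(x, zeros, zeros))
```

When the residual is zero, the block reduces to the shortcut followed by the final ReLU, which is the sense in which a residual block can fall back to passing its input through.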

When the gradients travel along Gradient Pathway-2, they pass through the two weight layers W1 and W2 of the residual function F(x) and are multiplied by the local derivatives of those layers; this is also how the gradients used to update the kernels of W1 and W2 are obtained. By the time the initial layers are reached, values that have travelled only through weight layers may have become very small or vanished altogether. This is where the shortcut connection (identity mapping) comes into the picture. Along Gradient Pathway-1 shown in the previous diagram, the gradients do not encounter any weight layer, so they pass through the block unchanged. The residual block is effectively skipped and the gradients can reach the initial layers, which helps them learn useful weights. Note that ResNet version 1 applies a ReLU after the addition, so the gradient is still modified by that ReLU before it enters either pathway of the block.
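The two pathways can also be read off the chain rule. Writing L for the loss (a symbol introduced here for illustration) and differentiating y = F(x, {Wi}) + x with respect to x gives:

```latex
\frac{\partial L}{\partial x}
  = \frac{\partial L}{\partial y}\,\frac{\partial y}{\partial x}
  = \frac{\partial L}{\partial y}\left(\frac{\partial F(x, \{W_i\})}{\partial x} + I\right)
  = \underbrace{\frac{\partial L}{\partial y}\,\frac{\partial F(x, \{W_i\})}{\partial x}}_{\text{pathway-2 (residual)}}
  + \underbrace{\frac{\partial L}{\partial y}}_{\text{pathway-1 (identity)}}
```

Even if the first term becomes very small, the second term carries the gradient back unchanged. In version 1, the term ∂L/∂y already includes the derivative of the ReLU applied after the addition.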

Basic properties and assumptions regarding the identity connection:

- The addition of the identity connection does not introduce extra parameters. Therefore, the computation complexity for simple deep networks and deep residual networks is almost the same.

- The dimensions of x and F must be the same for performing the addition operation. The dimensions can be matched in one of the following ways:

  - Extra zero entries can be padded to increase the dimensions. This does not introduce any extra parameters.

  - A projection shortcut (1 x 1 convolution) can be used to match the dimensions:

    y = F(x, {Wi}) + Ws x
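Both ways of matching dimensions can be sketched in NumPy for a feature map stored as height × width × channels. The shapes and the random projection matrix `w_s` are assumptions for illustration; in a trained network the projection weights are learned, and a stride of 2 would also be applied when the spatial size changes.

```python
import numpy as np

rng = np.random.default_rng(0)

x = rng.standard_normal((8, 8, 64))    # block input: 64 channels
f = rng.standard_normal((8, 8, 128))   # residual output F(x): 128 channels

# Option A: pad the extra channels of x with zeros (no new parameters).
extra = f.shape[-1] - x.shape[-1]
x_padded = np.pad(x, ((0, 0), (0, 0), (0, extra)))
y_pad = f + x_padded

# Option B: projection shortcut. A 1x1 convolution is a per-pixel matrix
# multiplication over channels, written here as x @ w_s.
w_s = 0.1 * rng.standard_normal((64, 128))
y_proj = f + x @ w_s

print(y_pad.shape, y_proj.shape)
print("projection parameters:", w_s.size)
```

Zero padding keeps the shortcut parameter-free, while the projection adds one weight per pair of input and output channels.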

Architecture of ResNet-50

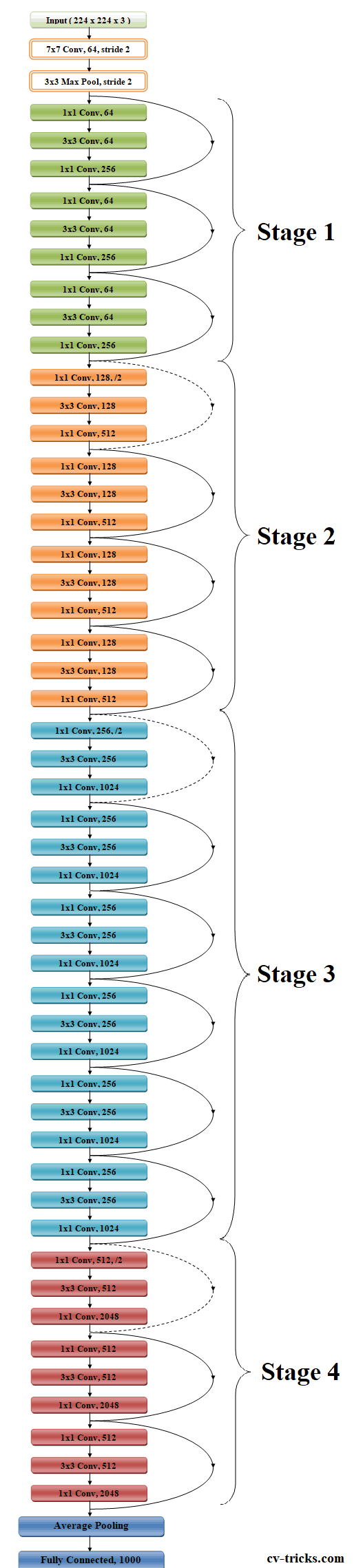

Now we’ll talk about the architecture of ResNet50. The architecture has 4 stages, as shown in the diagram below. The network accepts input images whose height and width are multiples of 32, with 3 channels. For the sake of explanation, we will consider the input size as 224 x 224 x 3. Every ResNet architecture performs the initial convolution and max-pooling using 7×7 and 3×3 kernel sizes respectively. Afterward, Stage 1 of the network starts; it has 3 residual blocks containing 3 layers each. The three convolutions in each block of stage 1 use 1×1, 3×3 and 1×1 kernels with 64, 64 and 256 filters respectively. The curved arrows refer to the identity connection. A dashed arrow indicates that the convolution in that residual block is performed with stride 2, so the height and width of the feature map are halved while the channel width is doubled. As we progress from one stage to the next, the channel width is doubled and the spatial size is halved.
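The shape bookkeeping can be traced with a short script. The block counts per stage (3, 4, 6, 3) and the stage output widths (256, 512, 1024, 2048) are taken from the ResNet-50 configuration in the ResNet paper, and the helper name `halve` is illustrative.

```python
# Trace the feature-map shape through ResNet-50 for a 224 x 224 x 3 input.
# Stage settings follow the ResNet-50 configuration of the ResNet paper.

def halve(size):
    """Spatial size after a stride-2 layer with 'same'-style padding."""
    return (size + 1) // 2

size, channels = 224, 3
size, channels = halve(size), 64          # 7x7 convolution, stride 2
print("conv1   ", (size, size, channels))
size = halve(size)                        # 3x3 max-pooling, stride 2
print("maxpool ", (size, size, channels))

stages = [(3, 256), (4, 512), (6, 1024), (3, 2048)]  # (blocks, output channels)
weight_layers = 1                         # the initial 7x7 convolution
for index, (blocks, out_channels) in enumerate(stages, start=1):
    if index > 1:
        size = halve(size)                # first block of stages 2-4 uses stride 2
    channels = out_channels
    weight_layers += 3 * blocks           # each bottleneck block has 3 convolutions
    print(f"stage {index} ", (size, size, channels))

weight_layers += 1                        # final fully connected layer
print("weight layers:", weight_layers)
```

Counting the first convolution, the three convolutions in each of the 16 blocks and the final fully connected layer gives the 50 layers in the name.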

For deeper networks such as ResNet50 and ResNet152, a bottleneck design is used. For each residual function F, 3 layers are stacked one over the other: 1×1, 3×3 and 1×1 convolutions. The 1×1 convolution layers are responsible for reducing and then restoring the dimensions. The 3×3 layer is left as a bottleneck with smaller input/output dimensions.
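A quick count shows why the bottleneck is attractive. The sketch assumes a block with 256 input and output channels and a 64-channel bottleneck, ignores biases and batch normalization, and compares it with a hypothetical block of two 3×3 convolutions at full width.

```python
def conv_weights(kernel, in_ch, out_ch):
    """Number of weights in a kernel x kernel convolution (no bias)."""
    return kernel * kernel * in_ch * out_ch

# Bottleneck block: 1x1 reduce, 3x3 at reduced width, 1x1 restore.
bottleneck = (
    conv_weights(1, 256, 64)
    + conv_weights(3, 64, 64)
    + conv_weights(1, 64, 256)
)

# Hypothetical alternative: two 3x3 convolutions at the full 256 channels.
full_width = 2 * conv_weights(3, 256, 256)

print(bottleneck, full_width, round(full_width / bottleneck, 1))
```

Because the expensive 3×3 convolution only ever sees 64 channels, the three-layer block needs far fewer weights than the two-layer block at full width.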

Finally, the network has an Average Pooling layer followed by a fully connected layer having 1000 neurons (ImageNet class output).

Architecture diagram of ResNet50

Let’s code ResNet50 in Keras

We will train the ResNet50 model on the Cat-Dog dataset. We will write the code from loading the model to training it and finally testing it on some test images. The full code and the dataset can be downloaded from this link. Make sure that Keras is installed on your system.

```python
# Importing the required libraries
import numpy as np
from keras.applications.resnet50 import ResNet50
from keras.layers import Dense, GlobalAveragePooling2D
from keras.models import Model
from keras.optimizers import SGD
from keras.preprocessing import image
from keras.preprocessing.image import ImageDataGenerator

# Download the architecture of ResNet50 with ImageNet weights
base_model = ResNet50(include_top=False, weights='imagenet')

# Taking the output of the last convolution block in ResNet50
x = base_model.output

# Adding a Global Average Pooling layer
x = GlobalAveragePooling2D()(x)

# Adding a fully connected layer having 1024 neurons
x = Dense(1024, activation='relu')(x)

# Adding a fully connected layer having 2 neurons which will
# give the probability of the image being either a dog or a cat
predictions = Dense(2, activation='softmax')(x)

# Model to be trained
model = Model(inputs=base_model.input, outputs=predictions)

# Training only the top layers, i.e. the layers which we have added at the end
for layer in base_model.layers:
    layer.trainable = False

# Compiling the model
# (newer Keras releases spell the `lr` argument as `learning_rate`)
model.compile(optimizer=SGD(lr=0.0001, momentum=0.9),
              loss='categorical_crossentropy', metrics=['accuracy'])

# Creating objects for image augmentation
train_datagen = ImageDataGenerator(rescale=1./255, shear_range=0.2,
                                   zoom_range=0.2, horizontal_flip=True)
test_datagen = ImageDataGenerator(rescale=1./255)

# Providing the paths of the training and test datasets
# Setting the image input size as (224, 224)
# We use class mode 'categorical' because the output layer is a 2-way softmax
training_set = train_datagen.flow_from_directory('training_set',
                                                 target_size=(224, 224),
                                                 batch_size=32,
                                                 class_mode='categorical')
test_set = test_datagen.flow_from_directory('test_set',
                                            target_size=(224, 224),
                                            batch_size=32,
                                            class_mode='categorical')

# Training the model for 5 epochs; len(generator) is the number of batches,
# so each epoch makes one pass over the data
# (newer Keras releases accept generators directly in model.fit)
model.fit_generator(training_set, steps_per_epoch=len(training_set), epochs=5,
                    validation_data=test_set, validation_steps=len(test_set))

# Now we will also train the last stage of ResNet50
for layer in base_model.layers[0:143]:
    layer.trainable = False
for layer in base_model.layers[143:]:
    layer.trainable = True

# Recompiling so that the change in trainable layers takes effect
model.compile(optimizer=SGD(lr=0.0001, momentum=0.9),
              loss='categorical_crossentropy', metrics=['accuracy'])

# Training the model for 10 epochs
model.fit_generator(training_set, steps_per_epoch=len(training_set), epochs=10,
                    validation_data=test_set, validation_steps=len(test_set))

# Saving the weights in the current directory
model.save_weights("resnet50_weights.h5")

# Predicting the final result for an image
test_image = image.load_img('cat_or_dog_test.jpg', target_size=(224, 224))
test_image = image.img_to_array(test_image) / 255.0  # same rescaling as in training

# Expanding the 3-d image to a 4-d batch.
# The dimensions will be Batch, Height, Width, Channel
test_image = np.expand_dims(test_image, axis=0)

# Predicting the final class
result = model.predict(test_image)[0].argmax()

# Fetching the class labels and printing the final label
for label, i in training_set.class_indices.items():
    if i == result:
        print("The test image has: ", label)
        break
```

ResNet – V2

Till now we have discussed ResNet50 version 1. Now we will discuss ResNet50 version 2, which is all about applying the activation before the weight layers (pre-activation) instead of after them (post-activation). The figure below shows the basic architecture of the post-activation (original, version 1) and pre-activation (version 2) variants of ResNet.

ResNet V1 and ResNet V2

The major differences between ResNet – V1 and ResNet – V2 are as follows:

- ResNet V1 adds the second non-linearity after the addition operation is performed in between the x and F(x). ResNet V2 has removed the last non-linearity, therefore, clearing the path of the input to output in the form of identity connection.

- ResNet V2 applies Batch Normalization and ReLU activation to the input before the multiplication with the weight matrix (convolution operation). ResNet V1 performs the convolution followed by Batch Normalization and ReLU activation.

Difference between ResNet V1 and ResNet V2

ResNet V2 mainly focuses on turning the second non-linearity into an identity mapping, i.e. the output of the addition between the identity mapping and the residual mapping is passed as it is to the next block for further processing. In ResNet V1, however, the output of the addition passes through a ReLU activation before it is transferred to the next block as input.

When the function ‘f’ (the operation applied after the addition) is an identity function, the signal can be propagated directly between any two units. Likewise, the gradient calculated at the output layer can reach the initial layers through the shortcuts without being altered along the way.
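The effect of removing the last ReLU can be seen in a small NumPy experiment. The sketch omits batch normalization and uses dense weights, so `block_v1` and `block_v2` are simplified stand-ins for the real blocks rather than faithful implementations.

```python
import numpy as np

def relu(z):
    return np.maximum(z, 0.0)

def block_v1(x, w1, w2):
    """Post-activation: ReLU is applied after the addition."""
    return relu(x + w2 @ relu(w1 @ x))

def block_v2(x, w1, w2):
    """Pre-activation: ReLU precedes each weight layer, none after the addition."""
    return x + w2 @ relu(w1 @ relu(x))

x = np.array([1.5, -2.0, 0.5, -0.1])
zeros = np.zeros((4, 4))  # zero residual, so only the shortcut carries signal

out_v1, out_v2 = x, x
for _ in range(10):  # stack ten blocks
    out_v1 = block_v1(out_v1, zeros, zeros)
    out_v2 = block_v2(out_v2, zeros, zeros)

print("v1:", out_v1)  # negative entries are clipped by the ReLU after addition
print("v2:", out_v2)  # the input passes through unchanged
```

With the residual switched off, the pre-activation stack behaves as a pure identity, whereas the post-activation stack still alters the signal at every block.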

Key Features of ResNet:

- ResNet uses Batch Normalization at its core. Batch Normalization normalizes the inputs to each layer, which stabilizes training and improves the performance of the network. The problem of covariate shift is mitigated.

- ResNet makes use of the Identity Connection, which helps to protect the network from vanishing gradient problem.

- Deep Residual Network uses the bottleneck residual block design to increase the performance of the network.

References

- He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. {arXiv link}

- Identity Mappings in Deep Residual Networks {arXiv link}