Part-1: Basics of TensorFlow:

    sankit@sankit:~$ python
    Python 2.7.6 (default, Oct 26 2016, 20:30:19)
    [GCC 4.8.4] on linux2
    Type "help", "copyright", "credits" or "license" for more information.
    >>>
    >>> import tensorflow as tf

    graph = tf.get_default_graph()
    graph.get_operations()

Currently, the output is empty as shown by [], as there is nothing in the graph.

If you want to print the names of the operations in the graph, do this:

    for op in graph.get_operations():
        print(op.name)

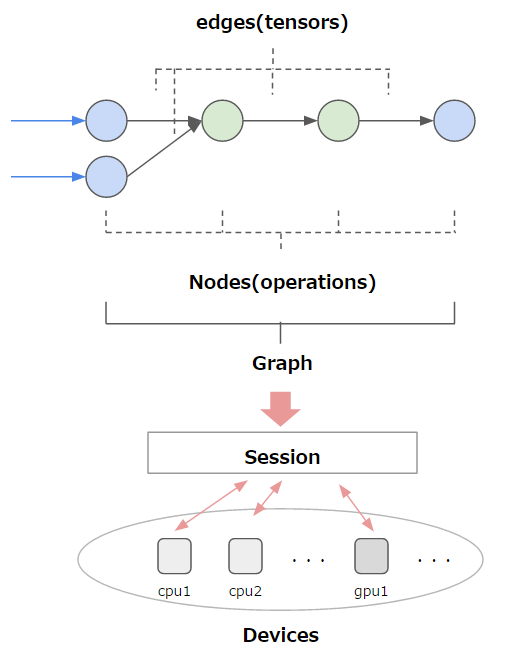

(ii) TensorFlow Session:

A graph only defines the computations; it is the blueprint. No variable holds a value until we run the graph, or part of the graph, within a session.
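To make the blueprint idea concrete, here is a minimal pure-Python sketch of deferred computation. The classes `Const` and `Multiply` and the function `run` are hypothetical names invented for this illustration; they are not TensorFlow APIs, and real TensorFlow graphs work differently under the hood.

```python
# Toy illustration only: these classes are hypothetical, not TensorFlow APIs.

class Const:
    """A graph node that holds a fixed value."""
    def __init__(self, value):
        self.value = value

    def evaluate(self):
        return self.value


class Multiply:
    """A graph node that multiplies the results of two other nodes."""
    def __init__(self, left, right):
        self.left = left
        self.right = right

    def evaluate(self):
        return self.left.evaluate() * self.right.evaluate()


def run(node):
    """Stand-in for a session: only here is anything actually computed."""
    return node.evaluate()


# Building the "graph" performs no arithmetic; it only wires nodes together.
product = Multiply(Const(2.0), Const(3.0))
is_number_before_run = isinstance(product, float)  # False: still a blueprint

# Running the node produces the value.
result = run(product)
print(is_number_before_run, result)
```

The point of the sketch is the split between the two steps: defining `product` describes a computation, and only `run(product)` carries it out.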

You can create a session like this:

    sess = tf.Session()
    ... your code ...
    ... your code ...
    sess.close()

Whenever you open a session, you need to remember to close it. Alternatively, you can use a with block, which closes the session for you:

    with tf.Session() as sess:
        sess.run(f)
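The reason a with block saves you from calling close() yourself is Python's context-manager protocol. The sketch below uses a hypothetical `ToySession` class (not a TensorFlow class) to show the mechanism: `__exit__` runs when the block ends, even if the body raises.

```python
# Hypothetical stand-in for a session; not part of TensorFlow.
class ToySession:
    def __init__(self):
        self.closed = False

    def close(self):
        self.closed = True

    def __enter__(self):
        return self

    def __exit__(self, exc_type, exc_value, traceback):
        # Called when the with block ends, normally or via an exception.
        self.close()
        return False  # do not swallow exceptions


with ToySession() as sess:
    open_inside_block = not sess.closed

closed_after_block = sess.closed

# Even if the body raises, the session is still closed.
failing = ToySession()
try:
    with failing:
        raise ValueError("simulated failure")
except ValueError:
    pass
closed_after_error = failing.closed

print(open_inside_block, closed_after_block, closed_after_error)
```

This is why the with form is usually the safer habit: there is no code path on which the close step can be forgotten.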

    >>> a = tf.constant(1.0)
    >>> a
    <tf.Tensor 'Const:0' shape=() dtype=float32>
    >>> print(a)
    Tensor("Const:0", shape=(), dtype=float32)

    with tf.Session() as sess:
        print(sess.run(a))

This will produce 1.0 as output.

    >>> b = tf.Variable(2.0, name="test_var")
    >>> b
    <tensorflow.python.ops.variables.Variable object at 0x7f37ebda1990>

    # Older TensorFlow versions: init_op = tf.initialize_all_variables()
    >>> init_op = tf.global_variables_initializer()

    with tf.Session() as sess:
        sess.run(init_op)
        print(sess.run(b))

This will output 2.0

    graph = tf.get_default_graph()
    for op in graph.get_operations():
        print(op.name)

This will now output:

    Const
    test_var/initial_value
    test_var
    test_var/Assign
    test_var/read
    init

As you can see, we declared ‘a’ as a constant, so Const has been added to the graph. Similarly, for the variable b, several ‘test_var’ operations have been added to the TensorFlow graph, such as test_var/initial_value and test_var/read. You can visualize the complete network using TensorBoard, a tool for visualizing a TensorFlow graph and the training process.

    >>> a = tf.placeholder("float")
    >>> b = tf.placeholder("float")
    >>> y = tf.multiply(a, b)
    # Earlier this used to be tf.mul, which changed with TensorFlow 1.0.
    # Typically we load feed_dict from somewhere else,
    # maybe by reading from a training data folder, etc.
    # For simplicity, we have put the values in feed_dict here.
    >>> feed_dict = {a: 2, b: 3}
    >>> with tf.Session() as sess:
    ...     print(sess.run(y, feed_dict))

The output will be 6.0, because both placeholders are floats.
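The placeholder idea can be sketched without TensorFlow: a placeholder is a slot with no value of its own, and the values arrive only when the computation is run. The `Placeholder` class and `multiply` helper below are hypothetical names made up for this illustration, not TensorFlow functions.

```python
# Hypothetical helpers for illustration; none of these are TensorFlow functions.

class Placeholder:
    """A named slot with no value of its own."""
    def __init__(self, name):
        self.name = name


def multiply(left, right):
    """Return a deferred multiplication: a function of the feed dictionary."""
    def evaluate(feed_dict):
        return float(feed_dict[left]) * float(feed_dict[right])
    return evaluate


a = Placeholder("a")
b = Placeholder("b")
y = multiply(a, b)  # nothing is computed yet

# The same "graph" can be run with different inputs.
first = y({a: 2, b: 3})
second = y({a: 4, b: 0.5})
print(first, second)

# In this toy, forgetting to feed a placeholder fails at run time,
# not when the graph is built.
try:
    y({a: 2})
    missing_feed_detected = False
except KeyError:
    missing_feed_detected = True
```

The design point is that one graph definition is reused for many inputs, which is how training data is typically pushed through a model one batch at a time.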

(iv) Device in TensorFlow:

TensorFlow has strong built-in support for running your code on a CPU, a GPU, or a cluster of GPUs, and it lets you select the device on which your code runs. However, this is not something you need to worry about when you are just getting started. We shall write a separate tutorial on this later. So, here is the complete picture:

Part-2: TensorFlow tutorial with simple example:

In this part, we shall examine code that runs a linear regression. Before that, let’s look at some of the basic TensorFlow functions that we shall use in the code.

Use random_normal to create random values from a normal distribution. In this example, w is a variable which is of size 784*10 with random values with standard deviation 0.01.

    w = tf.Variable(tf.random_normal([784, 10], stddev=0.01))
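To get a feel for what such an initialization produces, here is a rough standard-library sketch that fills a 784 by 10 nested list with draws from a normal distribution with mean 0 and standard deviation 0.01. It is only an analogy for the shape and spread of the values; the seed and the variable names are assumptions chosen for this example.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

rows, cols, stddev = 784, 10, 0.01

# Draw each entry independently from a normal distribution with mean 0.
w = [[random.gauss(0.0, stddev) for _ in range(cols)] for _ in range(rows)]

# Flatten the matrix to check its overall statistics.
flat = [value for row in w for value in row]
sample_mean = statistics.mean(flat)
sample_std = statistics.pstdev(flat)

print(len(w), len(w[0]))
print(sample_mean, sample_std)
```

With 7840 samples, the measured mean should sit very close to 0 and the measured standard deviation very close to 0.01, which is the kind of small random starting point commonly used for weights.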

    b = tf.Variable([10, 20, 30, 40, 50, 60], name='t')
    with tf.Session() as sess:
        # tf.initialize_all_variables() in older TensorFlow versions
        sess.run(tf.global_variables_initializer())
        print(sess.run(tf.reduce_mean(b)))

    a = [[0.1, 0.2, 0.3],
         [20, 2, 3]]
    b = tf.Variable(a, name='b')
    with tf.Session() as sess:
        # tf.initialize_all_variables() in older TensorFlow versions
        sess.run(tf.global_variables_initializer())
        print(sess.run(tf.argmax(b, 1)))

Output: the array [2, 0], i.e. the index of the largest value in each row of a.
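If it helps to see what these two reductions compute, here is a plain-Python sketch on the same numbers. The helper names `mean` and `argmax_per_row` are made up for this illustration; the code mirrors the arithmetic only. One difference to keep in mind: this version returns a Python float for the mean, whereas TensorFlow keeps the integer dtype of the input.

```python
def mean(values):
    """Average of a flat list, like reduce_mean over all elements."""
    return sum(values) / len(values)


def argmax_per_row(matrix):
    """Index of the largest entry in each row, like argmax along axis 1."""
    return [max(range(len(row)), key=row.__getitem__) for row in matrix]


b = [10, 20, 30, 40, 50, 60]
a = [[0.1, 0.2, 0.3],
     [20, 2, 3]]

mean_b = mean(b)                 # (10 + 20 + ... + 60) / 6
row_argmax = argmax_per_row(a)   # largest entry: column 2, then column 0
print(mean_b, row_argmax)        # prints: 35.0 [2, 0]
```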

Linear Regression Exercise:

Problem statement: In linear regression, you get a lot of data points and try to fit a straight line to them. For this example, we will create 101 data points and try to fit a line to them.

a) Creating training data:

trainX has values between -1 and 1, and trainY is 3 times trainX plus some random noise.

    import tensorflow as tf
    import numpy as np

    trainX = np.linspace(-1, 1, 101)
    trainY = 3 * trainX + np.random.randn(*trainX.shape) * 0.33

b) Placeholders:

    X = tf.placeholder("float")
    Y = tf.placeholder("float")

c) Modeling:

The linear regression model is y_model=w*x, and we have to calculate the value of w through our model. Let’s initialize w to 0 and create a model to solve this problem. We define the cost as the square of (Y-y_model). TensorFlow comes with many optimizers that compute the gradients and update the variables after each iteration while trying to minimize the specified cost. We are going to define the training operation as updating w using GradientDescentOptimizer to minimize the cost with a learning rate of 0.01. Later we will run this training operation in a loop.

    w = tf.Variable(0.0, name="weights")
    y_model = tf.multiply(X, w)

    cost = (tf.pow(Y - y_model, 2))
    train_op = tf.train.GradientDescentOptimizer(0.01).minimize(cost)

d) Training:

Up to this point, we have only defined the graph. No computation has happened.

None of the TensorFlow variables have any value yet. In order to run this graph, we need to create a Session and run it. Before that, we need to create the init op that initializes all variables:

    init = tf.initialize_all_variables()
    # init = tf.global_variables_initializer()  # for newer TensorFlow versions

    with tf.Session() as sess:
        sess.run(init)
        for i in range(100):
            for (x, y) in zip(trainX, trainY):
                sess.run(train_op, feed_dict={X: x, Y: y})
        print(sess.run(w))

Please note that the first thing we do is initialize the variables by calling init inside sess.run(). Then we run train_op, feeding it the data through feed_dict. Finally, we print the value of w (again inside sess.run()), which should be around 3.
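For readers who want to see what each training step does numerically, here is a standard-library sketch of the same loop, written by hand rather than with TensorFlow. It assumes plain gradient descent on the cost (y - w*x)^2, whose derivative with respect to w is -2*x*(y - w*x). The seed and the variable names are choices made for this example, and the noise differs from the NumPy version, so the exact result will not match a TensorFlow run.

```python
import random

random.seed(0)  # fixed seed so the sketch is repeatable

# 101 evenly spaced points in [-1, 1], mirroring np.linspace(-1, 1, 101).
train_x = [-1.0 + 2.0 * i / 100 for i in range(101)]
# y = 3x plus Gaussian noise with standard deviation 0.33.
train_y = [3.0 * x + random.gauss(0.0, 0.33) for x in train_x]

w = 0.0
learning_rate = 0.01

for epoch in range(100):
    for x, y in zip(train_x, train_y):
        error = y - w * x
        # d/dw (y - w*x)^2 = -2 * x * (y - w*x)
        gradient = -2.0 * x * error
        # Step against the gradient to reduce the cost.
        w -= learning_rate * gradient

print(w)
```

After 100 passes over the data, w should land near 3, the slope used to generate the data, with a small offset caused by the noise.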

e) Exercise:

If you create a new session block after this code and try to print w, what will be the output?

    with tf.Session() as sess:
        sess.run(init)
        print(sess.run(w))

Yes, you got it right: it will be 0.0. That’s the idea of symbolic computation. Once we have left the session created earlier, the values computed in it cease to exist, and the new session initializes w from scratch.
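The behaviour in this exercise can be mimicked with a small toy model in which the graph only describes a variable and each session holds its own copy of the value. `ToyVariable` and `ToySession` are hypothetical classes written for this illustration, not TensorFlow APIs, and the assign call simply stands in for the effect of training.

```python
# Hypothetical classes for illustration; not TensorFlow APIs.

class ToyVariable:
    """Graph-side description of a variable: a name and an initial value."""
    def __init__(self, name, initial_value):
        self.name = name
        self.initial_value = initial_value


class ToySession:
    """Holds the current values of variables; the graph itself stores none."""
    def __init__(self):
        self.values = {}

    def initialize(self, variable):
        self.values[variable.name] = variable.initial_value

    def assign(self, variable, value):
        self.values[variable.name] = value

    def read(self, variable):
        return self.values[variable.name]


w = ToyVariable("weights", 0.0)

first = ToySession()
first.initialize(w)
first.assign(w, 3.0)  # pretend training moved w to about 3
trained_value = first.read(w)

second = ToySession()  # a fresh session knows nothing about the first
second.initialize(w)
fresh_value = second.read(w)

print(trained_value, fresh_value)  # prints: 3.0 0.0
```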

I hope this tutorial gives you a good start on TensorFlow. Please feel free to ask your questions in the comments. The complete code can be downloaded from here.

You can continue learning TensorFlow in the second tutorial here.