Introduction

Photo-realistic image rendering using standard graphics techniques requires realistic simulation of geometry and light. The algorithms which we use currently for the task are effective but expensive. If we were able to render photo-realistic images using a model learned from data, we could turn the process of graphics rendering into a model learning and inference problem. This task can be modeled as an image-to-image translation model that can be used to create vivid virtual worlds by simply training it on new datasets.

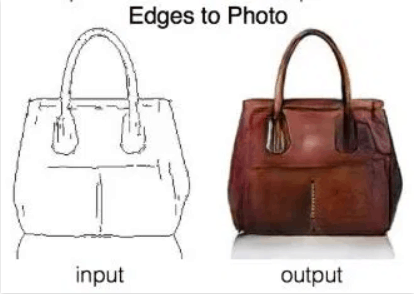

A simple example of an image-to-image translation task where the edges of an object are fed as an input to the model and the model generates the actual object.

The current state of art image-to-image translation Deep learning models are not able to generate high-resolution images because of various limitations. These include increased computational complexity for higher-dimensional images as well as a lack of ability to generalize as image size increases.

This paper addresses the 2 main concerns of vanilla adversarial learning i.e. 1) Difficulty in the generation of high-resolution images 2) Lack of details and realistic textures in generated high-resolution pictures.

Understanding image to image translation task

Before we jump into learning more about what an image-to-image translation task is, we must first understand how machine learning problems are formulated. It is virtually impossible to come up with a Deep learning model without formally defining the task it is supposed to perform. For image generation applications, researchers have formulated the task in such a way that the model is supposed to generate an image given another image.

This may sound daunting at first but if you abstract the entire definition of image-to-image translation as much as possible, it all boils down to a simple mathematical function.

In this image, X is the image that is input into our Deep learning model. The function F(x) that takes as input an image is nothing but our deep learning model. Furthermore, Y is the generated image which is the output of our deep learning model. Since X and Y can be completely different domains, we often say that our model maps images from one domain to the other. For example, in the image below, you can see that the input and output images are completely different. Hence we can say that they belong to completely different domains.

How are these functions/models designed?

The most popular approach to image-to-image translation problems these days is using adversarial learning. Adversarial learning involves 2 deep learning models brawling out in the open using all the data and making themselves better in the process. Standard adversarial learning consists of two networks that compete against each other. One of the networks is called a generator and the other is called a discriminator.

For image-to-image translation problems, the competition is framed in such a way that the generator tries to fool the discriminator whereas the discriminator attempts to tell apart the generated images versus real images. I will explain this with the help of an image as an example.

The input to the generator network is an image that needs to be mapped to its corresponding image of the other domain. Once the input image is fed into the generator network, we get generated images from the other end. After we have the generated images available with us, we shuffle the real and fake images and feed them into the discriminator. The discriminator then tries to tell apart the real from the fake images.

What is Pix2Pix then?

Pix2Pix can be thought of as the function that translates/transforms/maps a given image X to its corresponding image Y. Technically, it consists of the generator as well as discriminator networks but you would often find that the literature uses Pix2Pix and its generator i.e. UNet interchangeably.

Like any other GAN, Pix2Pix consists of a discriminator network (PatchGAN classifier) and a generator network (slightly modified UNet). You can find the PyTorch implementation of Pix2Pix in this repository.

U-NET

A U-Net consists of an encoder (downsampler) and decoder (upsampler). In the image below, the encoder is responsible for downsampling (reducing dimension and increasing channels) of the image whereas the upsampler performs the opposite functionality.

- Each block in the encoder is: Convolution -> Batch normalization -> Leaky ReLU

- Each block in the decoder is: Transposed convolution -> Batch normalization -> Dropout (applied to the first 3 blocks) -> ReLU

- There are skip connections between the encoder and decoder (as in the U-Net).

PatchGAN

The PatchGAN network tries to classify if each image patch is real or not real, as described in the pix2pix paper.

- Each block in the discriminator is: Convolution -> Batch normalization -> Leaky ReLU.

- The shape of the output after the last layer is (batch_size, 30, 30, 1).

- Each 30 x 30 image patch of the output classifies a 70 x 70 portion of the input image.

- The discriminator receives 2 inputs:

- The input image and the target image, which it should classify as real.

- The input image and the generated image (the output of the generator), which it should classify as fake.

Merits and Demerits of the standard pix2pix model

The standard pix2pix model is highly effective in performing image-to-image translation for images with smaller resolution ratios. The model was designed to perform translations across various unrelated domains. The model is highly robust and can generalize on the most difficult tasks given enough data. Furthermore, many researchers have gone ahead and created 3D models of the same to be trained on spatial data.

However, this model has its own limitations as discussed at the starting of this blog.

1) Difficulty in the generation of high-resolution images 2) Lack of details and realistic textures in generated high-resolution pictures. This arises due to the insufficient data existing in the lower layers of the encoder-decoder architecture. The lack of data in the encoded layers leads to problems in decoding the features. The decoder is not able to upsample the encoded features to a high-resolution image.

The Nvidia Pix2PixHD paper addresses these papers by introducing a novel generator architecture, multi-scale discriminator architecture, and an additional loss term in the pre-existing loss function.

Contribution of Pix2PixHD

- Coarse-to-fine generator: The paper breaks down the current Unet into 2 different sub-networks to increase resolution: G1 and G2. At the heart of the generator lies G1 which is wrapped around by G2. The global generator network operates at a resolution of 1024 × 512, and the local enhancer network outputs an image with a resolution that is 4× the output size of the previous one (2× along each image dimension). For synthesizing images at an even higher resolution, additional local enhancer networks could be utilized. For example, the output image resolution of the generator G = {G1, G2} is 2048 × 1024, and the output image resolution of G = {G1, G2, G3} is 4096 × 2048.

The figure may seem confusing at the first glance but all it does is virtually decomposes a single Unet into 2 different generators.

A semantic label map of resolution 1024×512 is passed through G1 to output an image of resolution 1024 × 512. The local enhancer network consists of 3 components: a convolutional front-end, a set of residual blocks, and a transposed convolutional back-end G. The resolution of the input label map to G2 is 2048 × 1024. Different from the global generator network, the input to the residual block is the element-wise sum of two feature maps: the output feature map of G2’s convolutional frontend, and the last feature map of the back-end of the global generator network G1. This helps in integrating the global information from G1 to G2.

During training, we first train the global generator and then train the local enhancer in the order of their resolutions. We then jointly fine-tune all the networks together. We use this generator design to effectively aggregate global and local information for the image synthesis task.

2. Multi-scale discriminators: High-resolution image synthesis requires the discriminator to have a large receptive field. This would require either a deeper network or larger convolutional kernels, both of which would increase the network capacity and potentially cause overfitting.

Hence, we use 3 discriminators that have an identical network structure but operate at different image scales. We will refer to the discriminators as D1, D2, and D3. Specifically, we downsample the real and synthesized high-resolution images by a factor of 2 and 4 to create an image pyramid of 3 scales. The discriminators D1, D2 and D3 are then trained to differentiate real and synthesized images at the 3 different scales, respectively. Creating discriminators that look at an image with different levels of receptive field allows the generator to learn about the larger structures as well as the finer details of an image.

Adding 3 discriminators would also change the loss function of the model.

This function ensures that outputs of all 3 discriminators are considered before parameter update.

3. Improved adversarial loss: The standard GAN loss consists of a discriminator-induced loss as well as an MAE loss (calculated wrt to the target image).

This function however doesn’t focus on the intermediate representations of the generator network. Allowing intermediate representations of the generator network to be induced into the loss functions enables the network to focus more on the intricate details during downsampling. This is done by extracting the intermediate representations of the discriminator and generator network and using a slightly modified MAE loss on the extractions.

T is the total number of layers and Ni denotes the number of elements in each layer.

The final loss function then becomes:

Code (check more from the official implementation)

- Generator architecture

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 |

class LocalEnhancer(nn.Module): def __init__(self, input_nc, output_nc, ngf=32, n_downsample_global=3, n_blocks_global=9, n_local_enhancers=1, n_blocks_local=3, norm_layer=nn.BatchNorm2d, padding_type='reflect'): super(LocalEnhancer, self).__init__() self.n_local_enhancers = n_local_enhancers ###### global generator model ##### ngf_global = ngf * (2**n_local_enhancers) model_global = GlobalGenerator(input_nc, output_nc, ngf_global, n_downsample_global, n_blocks_global, norm_layer).model model_global = [model_global[i] for i in range(len(model_global)-3)] # get rid of final convolution layers self.model = nn.Sequential(*model_global) ###### local enhancer layers ##### for n in range(1, n_local_enhancers+1): ### downsample ngf_global = ngf * (2**(n_local_enhancers-n)) model_downsample = [nn.ReflectionPad2d(3), nn.Conv2d(input_nc, ngf_global, kernel_size=7, padding=0), norm_layer(ngf_global), nn.ReLU(True), nn.Conv2d(ngf_global, ngf_global * 2, kernel_size=3, stride=2, padding=1), norm_layer(ngf_global * 2), nn.ReLU(True)] ### residual blocks model_upsample = [] for i in range(n_blocks_local): model_upsample += [ResnetBlock(ngf_global * 2, padding_type=padding_type, norm_layer=norm_layer)] ### upsample model_upsample += [nn.ConvTranspose2d(ngf_global * 2, ngf_global, kernel_size=3, stride=2, padding=1, output_padding=1), norm_layer(ngf_global), nn.ReLU(True)] ### final convolution if n == n_local_enhancers: model_upsample += [nn.ReflectionPad2d(3), nn.Conv2d(ngf, output_nc, kernel_size=7, padding=0), nn.Tanh()] setattr(self, 'model'+str(n)+'_1', nn.Sequential(*model_downsample)) setattr(self, 'model'+str(n)+'_2', nn.Sequential(*model_upsample)) self.downsample = nn.AvgPool2d(3, stride=2, padding=[1, 1], count_include_pad=False) def forward(self, input): ### create input pyramid input_downsampled = [input] for i in range(self.n_local_enhancers): input_downsampled.append(self.downsample(input_downsampled[-1])) ### output at coarest level output_prev = self.model(input_downsampled[-1]) ### build up one layer at a time for n_local_enhancers in range(1, self.n_local_enhancers+1): model_downsample = getattr(self, 'model'+str(n_local_enhancers)+'_1') model_upsample = getattr(self, 'model'+str(n_local_enhancers)+'_2') input_i = input_downsampled[self.n_local_enhancers-n_local_enhancers] output_prev = model_upsample(model_downsample(input_i) + output_prev) return output_prev class GlobalGenerator(nn.Module): def __init__(self, input_nc, output_nc, ngf=64, n_downsampling=3, n_blocks=9, norm_layer=nn.BatchNorm2d, padding_type='reflect'): assert(n_blocks >= 0) super(GlobalGenerator, self).__init__() activation = nn.ReLU(True) model = [nn.ReflectionPad2d(3), nn.Conv2d(input_nc, ngf, kernel_size=7, padding=0), norm_layer(ngf), activation] ### downsample for i in range(n_downsampling): mult = 2**i model += [nn.Conv2d(ngf * mult, ngf * mult * 2, kernel_size=3, stride=2, padding=1), norm_layer(ngf * mult * 2), activation] ### resnet blocks mult = 2**n_downsampling for i in range(n_blocks): model += [ResnetBlock(ngf * mult, padding_type=padding_type, activation=activation, norm_layer=norm_layer)] ### upsample for i in range(n_downsampling): mult = 2**(n_downsampling - i) model += [nn.ConvTranspose2d(ngf * mult, int(ngf * mult / 2), kernel_size=3, stride=2, padding=1, output_padding=1), norm_layer(int(ngf * mult / 2)), activation] model += [nn.ReflectionPad2d(3), nn.Conv2d(ngf, output_nc, kernel_size=7, padding=0), nn.Tanh()] self.model = nn.Sequential(*model) def forward(self, input): return self.model(input) |

- Enhanced loss function

This code is added to the forward pass of the Pix2PixHD model. You can check out the complete code here.

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 |

# GAN loss (Fake Passability Loss) pred_fake = self.netD.forward(torch.cat((input_label, fake_image), dim=1)) loss_G_GAN = self.criterionGAN(pred_fake, True) # GAN feature matching loss loss_G_GAN_Feat = 0 if not self.opt.no_ganFeat_loss: feat_weights = 4.0 / (self.opt.n_layers_D + 1) D_weights = 1.0 / self.opt.num_D for i in range(self.opt.num_D): for j in range(len(pred_fake[i])-1): loss_G_GAN_Feat += D_weights * feat_weights * \ self.criterionFeat(pred_fake[i][j], pred_real[i][j].detach()) * self.opt.lambda_feat # VGG feature matching loss loss_G_VGG = 0 if not self.opt.no_vgg_loss: loss_G_VGG = self.criterionVGG(fake_image, real_image) * self.opt.lambda_feat # Only return the fake_B image if necessary to save BW return [ self.loss_filter( loss_G_GAN, loss_G_GAN_Feat, loss_G_VGG, loss_D_real, loss_D_fake ), None if not infer else fake_image ] |

Multiple discriminators

|

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 |

class MultiscaleDiscriminator(nn.Module): def __init__(self, input_nc, ndf=64, n_layers=3, norm_layer=nn.BatchNorm2d, use_sigmoid=False, num_D=3, getIntermFeat=False): super(MultiscaleDiscriminator, self).__init__() self.num_D = num_D self.n_layers = n_layers self.getIntermFeat = getIntermFeat for i in range(num_D): netD = NLayerDiscriminator(input_nc, ndf, n_layers, norm_layer, use_sigmoid, getIntermFeat) if getIntermFeat: for j in range(n_layers+2): setattr(self, 'scale'+str(i)+'_layer'+str(j), getattr(netD, 'model'+str(j))) else: setattr(self, 'layer'+str(i), netD.model) self.downsample = nn.AvgPool2d(3, stride=2, padding=[1, 1], count_include_pad=False) def singleD_forward(self, model, input): if self.getIntermFeat: result = [input] for i in range(len(model)): result.append(model[i](result[-1])) return result[1:] else: return [model(input)] def forward(self, input): num_D = self.num_D result = [] input_downsampled = input for i in range(num_D): if self.getIntermFeat: model = [getattr(self, 'scale'+str(num_D-1-i)+'_layer'+str(j)) for j in range(self.n_layers+2)] else: model = getattr(self, 'layer'+str(num_D-1-i)) result.append(self.singleD_forward(model, input_downsampled)) if i != (num_D-1): input_downsampled = self.downsample(input_downsampled) return result |

Working example

Please check this colab notebook for a working example.

Results

The modified network was able to generate insanely realistic high resolution images. These images contained proper structures in the image and also included fine details that was absent earlier when training a vanilla pix2pix model.

These are the images that were generated using the custom pix2pix model. The bottom left shows the instance map which was provided as an input to the pix2pix model and it was mapped to a realistic image of a face.

This is the synthetic image of a car’s view after providing the labeled instance map. As you might have noticed, the model is able to generate highly realistic images. The authors also propose to use this model to generate unique training examples by providing labeled data. This means that the authors plan on generating fake data. Availability of such a dataset would help researchers make giant leaps in domains where a huge amount of labeled training data is needed.